
Welcome, humans.

So, Sam and Elon went at it on X again: this time Elon accused ChatGPT of being unsafe, and Sam leveled the same accusation back at Elon over Tesla Autopilot. Meanwhile, AI video has progressed dramatically over the past 2.5 years, as the classic Will Smith spaghetti test demonstrates.


What do these two things have in common? They're both messy AF.

Here's what happened in AI today: |

- Anthropic CEO Dario Amodei criticized U.S. chip exports to China at Davos.
- A PwC survey found 56% of CEOs saw no ROI from AI spending despite massive investments.
- Liquid AI dropped a reasoning model that can run on your phone.
- Ukraine announced plans to share wartime combat data with allies to train AI military models.


Later today at 1pm ET: Corey's hosting a free live session on what enterprise buyers actually care about when evaluating AI tools, plus how vendors can stop misreading the room. Register for free here.


Anthropic's CEO Says We're 6-12 Months From AI That Does Most Coding… And He's Worried


DEEP DIVE: Everything Anthropic CEO Dario Amodei said at Davos

Dario Amodei doesn't usually do media blitzes, but when he does, they come with, how do we say this… a flair for the dramatic? So when the Anthropic CEO showed up at Davos and delivered three separate interviews warning about AI's trajectory, people paid attention.

The headline-grabber? He called the potential sale of US AI chips to China "a bit like selling nuclear weapons to North Korea."

But that soundbite undersells what he actually laid out. Here's what Dario said is coming next (and why you should care):

On timelines: |


On economics: |


On the nightmare scenario: |


In the best talk of the three, Demis Hassabis, Google DeepMind's CEO, pushed back slightly on timelines, noting that coding is easier to automate than scientific discovery because you can verify results instantly.

But even he admitted: "I think we're going to see this year the beginnings of maybe impacting the junior level entry level kind of jobs."

Meanwhile, Anthropic keeps executing.


What this means for you: If Dario's right, we have 1-2 years to adapt to AI that matches human-level cognition across most tasks. If Demis is right, that timeline is more like 3-5 years, or even 10. And both of them would actually be cool with the pace slowing down a bit if they could get Chinese firms to agree (for the record, Demis says Chinese firms are about 6 months behind the US atm).

The main thing to watch for? Progress on AI systems improving the training and building of other AI systems (the self-improvement loop). If progress on that starts to take off, so will everything else.

We'll leave you with Demis' advice for would-be junior-level employees or interns who might have trouble finding work this year: take the time off to play with the tools.

He says you should try to get unbelievably proficient at using them to "leapfrog" yourself into being useful in your profession. You might even get better at using them than the researchers themselves, who spend all their time building and not enough time exploring the "capability overhang" of the current and future generations of tools.

You hear that, young folk? Get better at AI than Demis, get a job. What, like it's hard?


FROM OUR PARTNERS

Agents that don't suck


Are your agents working? Most agents never reach production.

Agent Bricks helps you build high-quality agents grounded in your data. We mean "high-quality" in the practical sense: accurate, reliable, and built for your workflows.

Generic benchmarks don't cut it. Agent Bricks measures performance on the tasks that matter to your business.

Evaluate agents automatically, and keep improving accuracy with human feedback. With research-backed techniques for building, evaluating, and optimizing, you can turn your business data into production agents faster, with governance built in from day one.

See how Agent Bricks works


Prompt Tip of the Day

Claude on the web is really good at hyperlinking now. We've set up instructions in our projects, skills, and memory so that whenever we provide a link (or multiple links), it automatically hyperlinks them all in the body of the text it writes for us. To do the same (or add your own rules), try something like this (with code execution on):

Every time I provide a link, always hyperlink it in the body of the text for me (as opposed to at the end), typically on the main action or subject; only link the 2-3 key words max. [If pasting the body of the text to reference and the link for multiple sources]: Make sure to hyperlink all links provided in context where the referenced content is used.


Treats to Try

*Asterisk = from our partners (only the first one!). Advertise to 600K readers here!


- *Speak naturally. Wispr Flow turns it into clean, final-draft text with punctuation and lists, ready to paste anywhere. Give your hands a break ➜ start flowing for free today.
- Legato embeds AI-powered app creation directly into SaaS platforms: your users describe what they need in plain language, like "alert me weekly about employees missing compliance training," and Legato generates production-ready apps, workflows, and automations directly inside your product (raised $7M).
- LTX launched audio-to-video features: upload your audio and generate video where lip-sync, motion, and actions follow the rhythm of your sound.
- Skills.sh works like npm for coding agents: type `npx skills add stripe/ai` to instantly teach Claude Code or Cursor your company's best practices.
- Adam replaces CAD clicks with prompts: type "add a fillet here" to edit parts, optimize feature trees by merging duplicates, and convert ad-hoc models into parametric designs with cascading variables.
- Overworld generates playable game worlds at 60fps on your laptop: walk through a forest and the world updates continuously as you move (demo).
- TuCuento generates choose-your-own-adventure stories: pick your kid's name and favorite animal, select values like courage, then make decisions together scene by scene. There's a free tier (3 stories/month), then it's $3.99/month.


Around the Horn

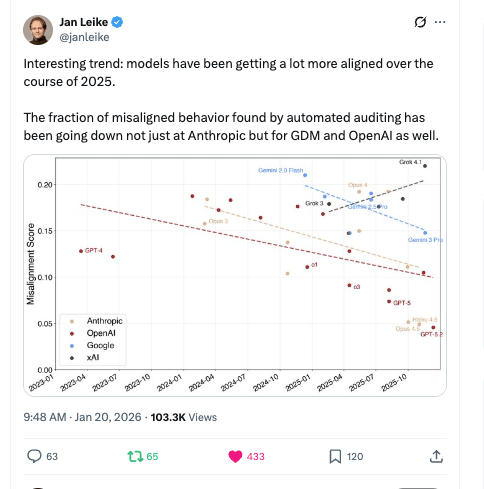

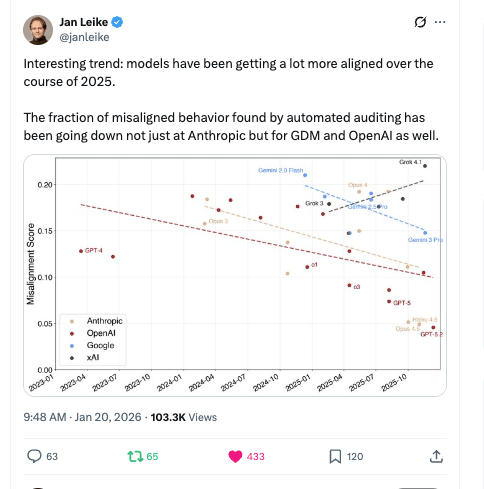

Good news for model alignment!


- Isomorphic Labs, the Google DeepMind spinout led by Demis Hassabis, signed its third pharma deal, partnering with Johnson & Johnson to design AI-generated drugs across multiple molecule types (its other collabs are with Novartis and Eli Lilly).
- OpenAI introduced age prediction for ChatGPT, which will assess behavioral and account-level signals to identify minors and automatically apply content filters.
- Google warned that grid connection delays (4-12 years) are now the #1 barrier to building data centers, forcing hyperscalers to co-locate next to power plants. But this creates single-point-of-failure risks, since you lose the redundancy of drawing from multiple grid sources.
- OpenAI and ServiceNow signed a multi-year deal to embed OpenAI's AI models directly into ServiceNow's enterprise software, enabling AI agents that take autonomous actions, like handling IT support tasks and customer service, rather than just providing suggestions.
- A PwC survey of 4,454 business leaders found that 56% of CEOs reported seeing neither increased revenue nor decreased costs from AI investments, with only 12% experiencing both lower costs and higher revenue.
- NVIDIA invested $150M in AI inference startup Baseten, which provides software infrastructure that optimizes how AI models run on GPUs (they raised $300M total).
- BioticsAI gained FDA approval for its AI-powered fetal ultrasound abnormality detection tool.
- Humans&, an AI startup founded by former researchers from Anthropic, xAI, and Google, raised a $480M seed round from investors including NVIDIA, Jeff Bezos, and Google Ventures. The company aims to build AI collaboration tools that empower people rather than replace them.
- Ukraine announced it will share wartime combat data with allies to train AI military models, including millions of hours of drone footage collected since Russia's 2022 invasion.


Midweek Model

Liquid AI released LFM2.5-1.2B-Thinking, a reasoning model that can run on any phone with 900MB of memory and beats Qwen3-1.7B (which has roughly 40% more parameters) on math, tool use, and instruction following (try it here).

The big deal here is the combination of small size and high intelligence that makes reasoning actually work on-device. With a standard AI model, you send your question to a server farm, wait for processing, then get an answer back.

This model flips that: it runs entirely on your phone in less than 1GB of memory. The key ingredient is "thinking traces," where the model generates internal reasoning steps before producing an answer, similar to how OpenAI's o-series models work, but compressed into a tiny package.

It beats models with 40% more parameters thanks to massive training (28 trillion tokens) and reinforcement learning that taught it to think more efficiently.

This matters for edge AI, meaning models that run locally without depending on the cloud. You could run it with ChatterUI on Android or LocallyAI on iOS.


You're never too old to learn (one could even say learning keeps you young…)


That's all for now.

What'd you think of today's email?


P.P.S.: Love the newsletter, but only want to get it once per week? Don't unsubscribe; update your preferences here.
