
Welcome, humans.

AI industry insiders just launched Poison Fountain, a site that feeds corrupted training data to AI crawlers to intentionally break the models. The kicker? Some allegedly work at major AI companies.

They're citing Geoffrey Hinton's existential warnings and arguing regulation's too slow. Their solution? Poison the well before it's too late. It's giving "call is coming from inside the house" energy: working on AI by day, sabotaging it by night!

Here's what happened in AI today:

- Former OpenAI policy chief launched nonprofit pushing for independent AI safety audits.
- Bandcamp banned AI-generated music from its platform.
- Micron said AI-driven memory chip shortage is "unprecedented" and will last beyond 2026.
- Google said Gemini will stay ad-free as OpenAI tests ads in ChatGPT.

Don't forget: Check out our podcast, The Neuron: AI Explained on Spotify, Apple Podcasts, and YouTube. New episodes air every week on Tuesdays after 2pm PST!

Former OpenAI Insider Says AI Companies Shouldn't Grade Their Own Homework

Miles Brundage spent seven years at OpenAI figuring out policy for AGI. Now he's launching a nonprofit with one provocative argument: AI labs are basically writing their own report cards, and nobody's checking their work.

His new organization, AVERI (AI Verification and Evaluation Research Institute), just launched with $7.5M in funding and a mission to push for independent safety audits of frontier AI models. Think of it like how your vacuum cleaner's battery gets tested by independent labs so it doesn't catch fire, except for AI systems that could potentially reshape society.

Right now, here's how AI safety testing works:

- Companies test themselves: Leading AI labs conduct their own safety evaluations and publish whatever results they want, with no external verification required.
- No universal standards: Each company decides what tests to run, how rigorous they should be, and what counts as "safe enough."
- Trust us, bro: Consumers, businesses, and governments just have to take the companies' word that everything's fine.

AVERI published a research paper (coauthored by 30+ AI safety researchers) proposing "AI Assurance Levels" ranging from basic third-party testing all the way to "treaty grade" assurance for international agreements. They're not planning to do audits themselves. Instead, they want existing audit firms or new startups to take on this work.

Who might force AI companies to actually do this? Brundage sees several pressure points: insurance companies requiring audits before underwriting policies, investors demanding independent verification before writing billion-dollar checks, or customers refusing to buy unaudited AI systems. The EU AI Act already hints at this direction for "high-risk" AI deployments.

Why this matters: The big challenge is finding qualified auditors, not just establishing standards. AI safety auditing requires a rare combo of technical AI expertise and governance knowledge, and everyone with those skills is getting lucrative offers from the companies they'd be auditing. Brundage's solution? Build "dream teams" mixing people from audit firms, cybersecurity, AI safety nonprofits, and academia.

FROM OUR PARTNERS

Introducing the first AI-native CRM

Connect your email, and you'll instantly get a CRM with enriched customer insights and a platform that grows with your business.

With AI at the core, Attio lets you:

- Prospect and route leads with research agents
- Get real-time insights during customer calls
- Build powerful automations for your complex workflows

Join industry leaders like Granola, Taskrabbit, Flatfile and more.

👉 Try Attio Pro for free

Prompt Tip of the Day

January's almost over, and if you're anything like us, you're wondering whether you're actually on track for 2026 or just pretending everything's fine.

Here's a brutally honest prompt that turns ChatGPT or Claude into your accountability coach with no sugarcoating, just real talk about whether you're headed toward your goals or quietly sabotaging them.

I want you to act as my calm, honest, and practical accountability coach. Your job is to help me review how January 2026 went in relation to my goals for 2026, and to tell me clearly whether I'm on track, slightly behind, or seriously lagging in a way that matters for the rest of the year. First, I'll give you context. Then I want you to analyze, challenge gently where needed, and end with a simple, concrete plan for the coming months.

Here is my information:

1. My 2026 goals: [Paste your goals with metrics and deadlines]

2. What actually happened in January 2026: [Be honest about what you did and didn't do]

3. How I feel about January: [Your emotional take in 1-2 sentences]

Using this information, do the following in clear sections:

A. Quick snapshot – Summarize January in 3-5 bullets and label each goal as "On track," "Slightly behind but recoverable," or "At risk."

B. Signal vs noise – Tell me which parts are just "January noise" vs true signals about my likely behavior this year.

C. Pattern check – Point out 2-4 patterns in my behavior and whether they push me closer to or further from my goals.

D. Risk assessment – For each goal, what's the realistic risk I'll miss it if I keep doing what I did in January?

E. Vibe check – Reflect my emotional state back to me, plus one thing I can credit myself for and one hard truth I need to accept.

F. Practical adjustments – Suggest 3-5 specific changes for February-April with clear explanations of how each protects a 2026 goal.

G. Simple monthly tracking – Design a lightweight system with 3-5 key metrics I should track each month.

H. Final clarity – End with overall status, single most important focus for next 30 days, and one sentence of grounded encouragement.

Our favorite insight: Unlike generic "how's your year going?" check-ins, this prompt forces you to confront the gap between what you said you'd do and what you actually did, then gives you a concrete plan instead of vague motivation. The "signal vs noise" section is particularly useful for distinguishing between normal January chaos and patterns that'll sink your whole year if you ignore them.

Treats to Try

- Listen Labs uses AI interviewers to run adaptive video customer research at scale, letting you screen panelists and search through hundreds of interviews in one hub (raised $69M).
- Runpod hosts AI apps with easily configured GPU hardware and serverless options, now serving 500K developers including OpenAI, Replit, and Cursor (raised $20M).
- Pocket TTS (TTS = text to speech) clones voices from 5-second audio samples and runs 6x faster than real-time on your laptop's CPU with no GPU (demo); its 100M params beat larger models in quality using continuous latents instead of tokens (paper, from Kyutai's €300M French AI lab). Free to try.
- GPT-5.2-Codex executes multi-hour coding tasks like building features, refactoring entire codebases, and debugging complex bugs. It's now available via API after being Codex-only.
- Mercury and Mercury Coder are blazing-fast diffusion LLMs for text and code generation you can drop into your projects. Flashback to our interview with Inception Labs founder Stefano Ermon.

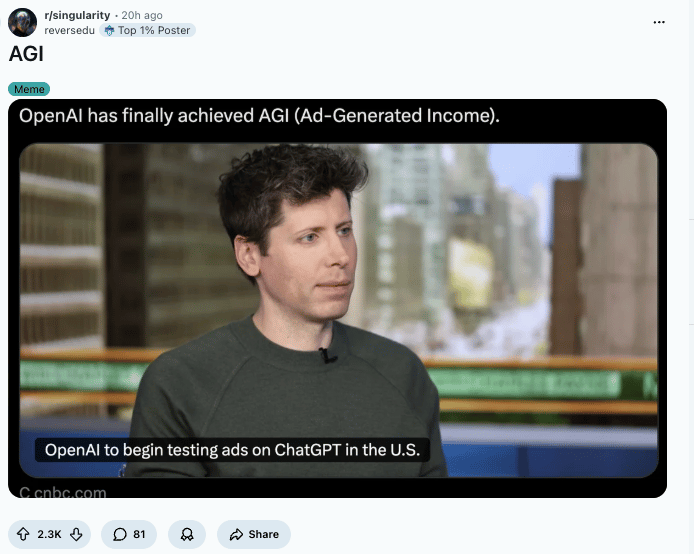

Around the Horn

- Bandcamp banned music generated wholly or substantially by AI from its platform, prohibiting AI impersonation of artists while allowing human creators to flag suspected AI content for removal.
- Micron called the AI-driven memory chip shortage "unprecedented" and said it will extend beyond 2026 as demand for high-bandwidth memory consumes available capacity.
- Google said Gemini will stay ad-free as OpenAI starts testing ads in ChatGPT within weeks for free-tier users.
- Elon Musk's xAI expanded its Colossus supercomputer to 2 GW with 555,000 GPUs purchased for $18B, making it the world's largest AI training facility, 4x bigger than the next competitor.
- A Brookings Institution report found that AI risks in schools currently outweigh benefits, warning it can "undermine children's foundational development" and create cognitive decline.
- People are committing to "analog lifestyles" in 2026 as backlash to AI: Michaels saw searches for "analog hobbies" jump 136% and yarn kit sales soar 1,200%.
- Data centers are projected to consume up to 12% of US electricity by 2028, driving residential electricity rates up 5.2% and straining America's aging grid.
- California teen Sam Nelson died from an overdose after ChatGPT coached him on drug combinations over several months, his mother said.

FROM OUR PARTNERS

What's "Accurate Enough" for AI?

Speed, iteration, and self-correction matter more than perfection when using AI in production. Learn how early signals help teams stay in flow and make better decisions, faster.

Read the Honeycomb blog here

